Access Control & Resource Sharing

Access tokens are used to authenticate and authorize requests to the API.

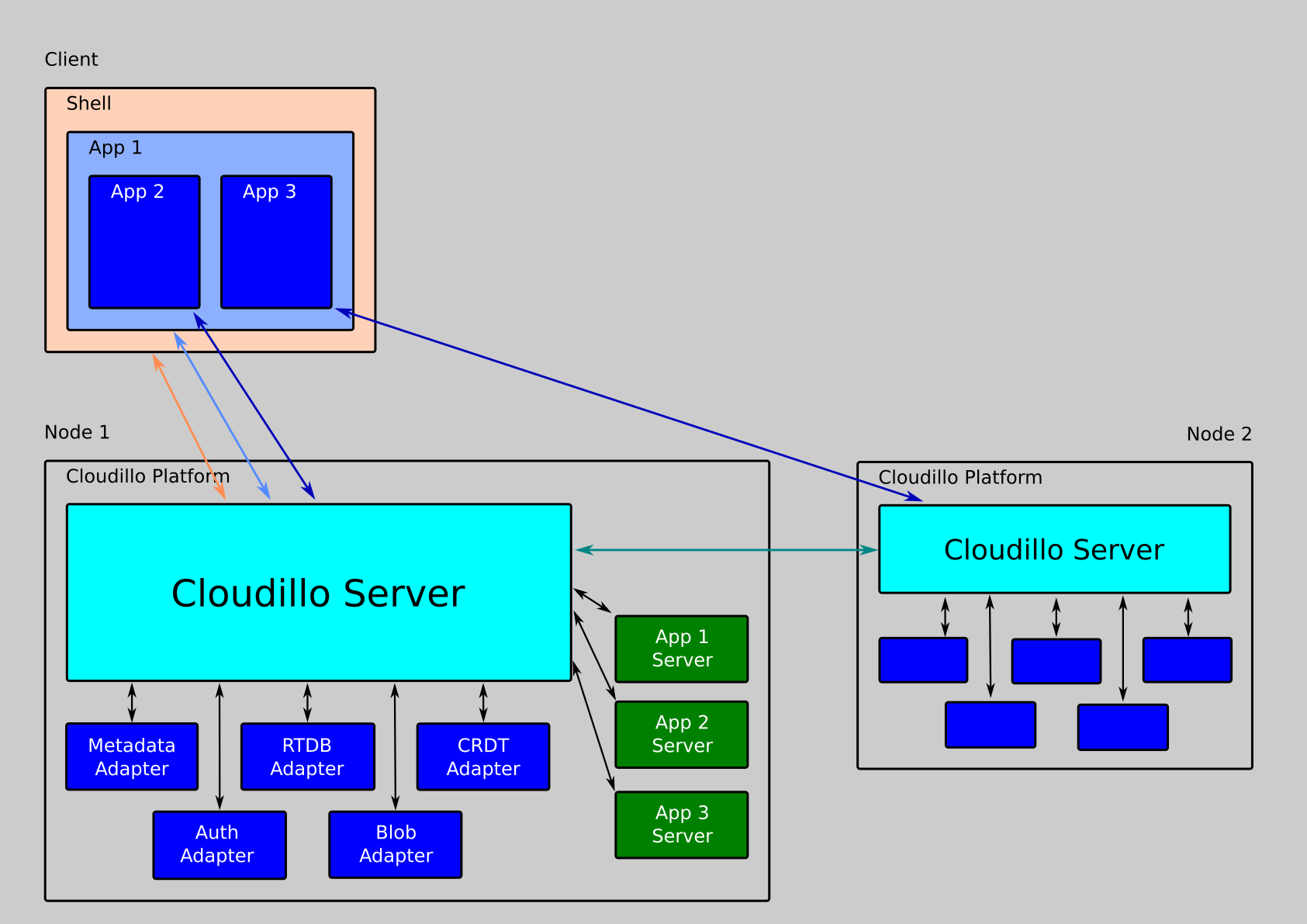

Access tokens are usually bound to a resource, which can reside on any node within the Cloudillo network.

Token Types

Cloudillo uses different token types for different purposes:

AccessToken

Session tokens for authenticated API requests.

Purpose: Grant a client access to specific resources

Format: JWT (JSON Web Token)

Lifetime: 1-24 hours (configurable)

JWT Claims:

```jsonc
{
  "sub": "alice.example.com", // Subject (user identity)
  "aud": "bob.example.com",   // Audience (target identity)
  "exp": 1738483200,          // Expiration timestamp
  "iat": 1738396800,          // Issued at timestamp
  "scope": "resource_id"      // Scope (resource identifier)
}
```

ActionToken

Cryptographically signed tokens representing user actions (see Actions).

Purpose: Federated activity distribution

Format: JWT signed with profile key (ES384)

Lifetime: Permanent (immutable, content-addressed)

ProxyToken

Inter-instance authentication tokens.

Purpose: Get access tokens from a remote instance (on behalf of a user)

Format: JWT

Lifetime: Short-lived (1-5 minutes)

JWT Claims:

```jsonc
{
  "sub": "alice.example.com", // Requesting identity
  "aud": "bob.example.com",   // Target identity
  "exp": 1738400000,
  "iat": 1738396800,
  "scope": "resource_id"      // Scope (resource identifier)
}
```

Access Token Lifecycle

1. Token Request

Client requests an access token from their node.

2. Token Generation

The AuthAdapter creates a JWT with appropriate claims.

3. Token Validation

Every API request validates the token before processing.

4. Token Expiration

Tokens expire and must be refreshed.

Requesting an Access Token

When a user wants to access a resource, they follow this process:

- The user’s node requests an access token.

- If the resource is local, the node issues the token directly.

- If the resource is remote, the node authenticates with the remote node and requests a token on behalf of the user.

- The access token is returned to the user, allowing them to interact with the resource directly on its home node.

Security & Trust Model

- Access tokens are cryptographically signed to prevent tampering.

- Tokens have expiration times and scopes to limit misuse.

- Nodes validate access tokens before granting access to a resource.

Example 1: Request access to own resource

```mermaid
sequenceDiagram
    box Alice frontend
        participant Alice shell
        participant Alice app
    end
    participant Alice node
    Alice shell ->>+Alice node: Initiate access token request
    Note right of Alice node: Create access token
    Alice node ->>+Alice shell: Access token granted
    deactivate Alice node
    Alice shell ->>+Alice app: Open resource with this token
    deactivate Alice shell
    Alice app ->+Alice node: Use access token
    loop Edit resource
        Alice app --> Alice node: Edit resource
    end
    deactivate Alice app
```

- Alice opens a resource using her Cloudillo Shell

- Her shell initiates an access token request at her node

- Her node creates an access token and sends it to her shell

- Her shell gives the access token to the App Alice uses to open the resource

- The App uses the access token to edit the resource

Example 2: Request access to a resource of another identity

```mermaid
sequenceDiagram
    box Alice frontend
        participant Alice shell
        participant Alice app
    end
    participant Alice node
    participant Bob node
    Alice shell ->>+Alice node: Initiate access token request
    Note right of Alice node: Create signed request
    Alice node ->>+Bob node: Request access token
    Note right of Bob node: Verify signed request
    Note right of Bob node: Create access token
    deactivate Alice node
    Bob node ->>+Alice node: Grant access token
    deactivate Bob node
    Alice node ->>+Alice shell: Access token granted
    deactivate Alice node
    Alice shell ->>+Alice app: Open resource with this token
    deactivate Alice shell
    Alice app ->+Bob node: Use access token
    loop Edit resource
        Alice app --> Bob node: Edit resource
    end
    deactivate Alice app
    deactivate Bob node
```

- Alice opens a resource using her Cloudillo Shell

- Her shell initiates an access token request through her node

- Her node creates a signed request and sends it to Bob’s node

- Bob’s node creates an access token and sends it back to Alice’s node

- Alice’s node sends the access token to her shell

- Her shell gives the access token to the App Alice uses to open the resource

- The App uses the access token to edit the resource

Token Validation Process

Authentication Middleware

Cloudillo uses Axum middleware to validate tokens on protected routes:

Handler Patterns:

Pattern 1: Required Authentication

```rust
async fn protected_handler(auth: Auth) -> Result<Response> {
    // auth.tn_id, auth.id_tag, and auth.scope are available here.
    // The handler only runs if the extractor validated the token.
    todo!()
}
```

Pattern 2: Optional Authentication

```rust
async fn public_handler(auth: Option<Auth>) -> Result<Response> {
    if let Some(auth) = auth {
        // Authenticated user: the Auth context is available
    } else {
        // Anonymous access: no Auth context
    }
    todo!()
}
```

The Axum extractor validates the token before the handler is called.

If validation fails on a route that requires authentication, the request is rejected.

Validation Steps

When a request includes an `Authorization: Bearer <token>` header:

- Extract Token: Parse the JWT from the Authorization header
- Decode JWT: Parse the header and claims (no verification yet)
- Verify Signature: Validate it using the secret stored by the AuthAdapter
- Check Expiration: Ensure `exp > current time`
- Validate Claims: Check `aud`, `scope`, and `tid`
- Create Auth Context: Build the `Auth` struct for the handler

```rust
pub struct Auth {
    pub tn_id: TnId,        // Tenant ID (database key)
    pub id_tag: String,     // Identity tag (e.g., "alice.example.com")
    pub scope: Vec<String>, // Permissions (e.g., ["read", "write"])
    pub token_type: TokenType,
}
```
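The checks that follow signature verification can be sketched as plain functions. This is a minimal sketch, not Cloudillo's middleware: it assumes the token has already been decoded and its signature verified by the AuthAdapter, and `Claims`, `ValidatedAuth`, `AuthError`, `extract_bearer`, and `validate_claims` are hypothetical names. Scope is parsed as a space-separated string, matching the Token Generation algorithm.

```rust
/// Decoded access-token claims (hypothetical shape; signature already verified).
#[derive(Debug, Clone)]
pub struct Claims {
    pub sub: String,
    pub aud: String,
    pub exp: i64,
    pub iat: i64,
    pub scope: String, // space-separated, e.g. "read write"
    pub tid: i64,
}

/// Simplified stand-in for the `Auth` context handed to handlers.
#[derive(Debug, PartialEq)]
pub struct ValidatedAuth {
    pub tn_id: i64,
    pub id_tag: String,
    pub scope: Vec<String>,
}

#[derive(Debug, PartialEq)]
pub enum AuthError {
    MissingToken,
    Expired,
    WrongAudience,
    WrongTenant,
}

/// Step 1: pull the raw token out of an `Authorization` header value.
pub fn extract_bearer(header: &str) -> Result<&str, AuthError> {
    header
        .strip_prefix("Bearer ")
        .map(str::trim)
        .filter(|t| !t.is_empty())
        .ok_or(AuthError::MissingToken)
}

/// Expiration and claim checks, then construction of the auth context.
pub fn validate_claims(
    claims: &Claims,
    now: i64,
    expected_aud: &str,
    expected_tid: i64,
) -> Result<ValidatedAuth, AuthError> {
    // The token is valid only while exp is strictly in the future.
    if claims.exp <= now {
        return Err(AuthError::Expired);
    }
    if claims.aud != expected_aud {
        return Err(AuthError::WrongAudience);
    }
    if claims.tid != expected_tid {
        return Err(AuthError::WrongTenant);
    }
    Ok(ValidatedAuth {
        tn_id: claims.tid,
        id_tag: claims.sub.clone(),
        scope: claims.scope.split_whitespace().map(String::from).collect(),
    })
}
```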

Axum extractors provide typed access to the authentication context:

TnId Extractor:

- `struct TnId(pub i64)`: wraps the internal tenant ID
- Usage: `handler(TnId(tn_id): TnId)` extracts it from the Auth context

IdTag Extractor:

- `struct IdTag(pub String)`: wraps the user's identity domain
- Usage: `handler(IdTag(id_tag): IdTag)` extracts it from the Auth context

Auth Extractor (Full Context):

- `tn_id`: internal tenant identifier
- `id_tag`: user identity (e.g., "alice.example.com")
- `scope`: permission vector (e.g., ["read", "write"])
- `token_type`: type of token (AccessToken, ProxyToken, etc.)

Usage: check `auth.scope.iter().any(|s| s == "write")` for permission checks

Permission System

Cloudillo uses ABAC (Attribute-Based Access Control) for comprehensive permission management. Access tokens work in conjunction with ABAC policies to determine what actions users can perform.

Learn more: ABAC Permission System

Scope-Based Permissions

Access tokens include a scope claim that specifies permissions.

Resource-Level Permissions

Permissions are checked at multiple levels:

- File-Level: Who can access a file

- Database-Level: Who can access a database (RTDB)

- Action-Level: Who can see an action token

- API-Level: Rate limiting, quota enforcement

Permission Checking

Algorithm: Check Permission

Input: auth context, resource, required_scope

Output: Result<()>

1. Check token scope:

- If required_scope NOT in auth.scope: Return PermissionDenied

2. Load resource metadata:

- Fetch metadata by tn_id + resource_id

3. Check ownership:

- If metadata.owner == auth.id_tag: Return OK (owner has all)

4. Check sharing list:

- If auth.id_tag in metadata.shared_with: Return OK

5. Default: Return PermissionDenied

This pattern combines:

- Token-level permissions (scope)

- Resource-level ownership

- Resource-level sharing permissions
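The algorithm above can be sketched in Rust with an in-memory metadata map. Assumptions: `ResourceMeta`, `MetaStore`, and the `NotFound` error for missing metadata are hypothetical, and this `Auth` is a simplified version of the struct described earlier.

```rust
use std::collections::HashMap;

#[derive(Debug, PartialEq)]
pub enum PermError {
    PermissionDenied,
    NotFound, // assumption: missing metadata is reported separately
}

pub struct Auth {
    pub tn_id: i64,
    pub id_tag: String,
    pub scope: Vec<String>,
}

pub struct ResourceMeta {
    pub owner: String,
    pub shared_with: Vec<String>,
}

/// Hypothetical metadata store keyed by (tn_id, resource_id).
pub type MetaStore = HashMap<(i64, String), ResourceMeta>;

pub fn check_permission(
    auth: &Auth,
    store: &MetaStore,
    resource_id: &str,
    required_scope: &str,
) -> Result<(), PermError> {
    // 1. Token-level check: the scope must be present in the token.
    if !auth.scope.iter().any(|s| s == required_scope) {
        return Err(PermError::PermissionDenied);
    }
    // 2. Load resource metadata by tn_id + resource_id.
    let meta = store
        .get(&(auth.tn_id, resource_id.to_string()))
        .ok_or(PermError::NotFound)?;
    // 3. Owners have full access.
    if meta.owner == auth.id_tag {
        return Ok(());
    }
    // 4. Explicit sharing list.
    if meta.shared_with.contains(&auth.id_tag) {
        return Ok(());
    }
    // 5. Default deny.
    Err(PermError::PermissionDenied)
}
```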

Cross-Instance Authentication

ProxyToken Flow

When Alice (on instance A) wants to access Bob’s resource (on instance B):

1. Alice’s client requests access from instance A.
2. Instance A creates a ProxyToken signed with its profile key.
3. Instance A sends the ProxyToken to instance B: `POST /api/auth/proxy`
4. Instance B validates the ProxyToken:
   - Fetches instance A’s public key
   - Verifies the signature
   - Checks expiration
5. Instance B creates an AccessToken for Alice.
6. Instance B returns the AccessToken to instance A.
7. Instance A returns the AccessToken to Alice’s client.
8. Alice’s client uses the AccessToken to access Bob’s resource directly on instance B.

ProxyToken Verification

Algorithm: Verify ProxyToken

Input: JWT token string, requester_id_tag

Output: Result<ProxyTokenClaims>

1. Decode JWT without verification (read claims)

2. Fetch requester's profile:

- GET /api/me from requester's instance

- Extract public keys from profile

3. Find signing key:

- Look up key by key_id (kid) in claims

- If not found: Return KeyNotFound error

4. Verify signature:

- Use requester's public key to verify JWT signature

5. Check expiration:

- If exp < current_time: Return TokenExpired

6. Return verified claims
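A sketch of the verification steps, assuming the token has already been decoded (step 1) and the requester's profile keys have been fetched into a map from key ID to key bytes (step 2). The actual signature check depends on the crypto library in use, so it is passed in as a closure; `ProxyTokenClaims` fields, `ProxyError`, and `verify_proxy_token` are hypothetical names.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, PartialEq)]
pub struct ProxyTokenClaims {
    pub sub: String,
    pub aud: String,
    pub exp: i64,
    pub iat: i64,
    pub kid: String, // ID of the signing key in the requester's profile
}

#[derive(Debug, PartialEq)]
pub enum ProxyError {
    KeyNotFound,
    InvalidSignature,
    TokenExpired,
}

/// `profile_keys` holds the keys published in the requester's profile;
/// `verify_sig` checks the JWT signature against one key.
pub fn verify_proxy_token<F>(
    claims: ProxyTokenClaims,
    profile_keys: &HashMap<String, Vec<u8>>,
    now: i64,
    verify_sig: F,
) -> Result<ProxyTokenClaims, ProxyError>
where
    F: Fn(&[u8]) -> bool,
{
    // Step 3: find the signing key by kid.
    let key = profile_keys.get(&claims.kid).ok_or(ProxyError::KeyNotFound)?;
    // Step 4: verify the signature with the requester's public key.
    if !verify_sig(key.as_slice()) {
        return Err(ProxyError::InvalidSignature);
    }
    // Step 5: reject expired tokens.
    if claims.exp < now {
        return Err(ProxyError::TokenExpired);
    }
    // Step 6: the claims can now be trusted.
    Ok(claims)
}
```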

Token Generation

Creating an Access Token

Algorithm: Create Access Token

Input: tn_id, id_tag, resource_id, scope array, duration

Output: JWT token string

1. Build AccessTokenClaims:

- sub: User identity (id_tag)

- aud: Resource identifier (resource_id)

- exp: current_time + duration

- iat: current_time

- scope: scope array joined as space-separated string

- tid: Tenant ID (tn_id)

2. Sign JWT:

- Use AuthAdapter to create JWT

- Signed with instance's private key

3. Return token string
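The claim-building half of this algorithm can be sketched as follows. Signing is left to the AuthAdapter and not shown; `AccessTokenClaims`, `build_access_claims`, and `unix_now` are hypothetical names, and the current time is passed in explicitly so the function is deterministic.

```rust
use std::time::{SystemTime, UNIX_EPOCH};

#[derive(Debug, PartialEq)]
pub struct AccessTokenClaims {
    pub sub: String,   // user identity (id_tag)
    pub aud: String,   // resource identifier
    pub exp: i64,      // now + duration
    pub iat: i64,      // now
    pub scope: String, // space-separated scopes
    pub tid: i64,      // tenant ID
}

pub fn build_access_claims(
    tn_id: i64,
    id_tag: &str,
    resource_id: &str,
    scope: &[&str],
    duration_secs: i64,
    now: i64,
) -> AccessTokenClaims {
    AccessTokenClaims {
        sub: id_tag.to_string(),
        aud: resource_id.to_string(),
        exp: now + duration_secs,
        iat: now,
        scope: scope.join(" "),
        tid: tn_id,
    }
}

/// Current Unix time in seconds, for production callers.
pub fn unix_now() -> i64 {
    SystemTime::now()
        .duration_since(UNIX_EPOCH)
        .expect("system clock is before 1970")
        .as_secs() as i64
}
```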

Token Refresh

Access tokens can be refreshed before expiration:

```http
POST /api/auth/refresh
Authorization: Bearer <expiring_token>
```

Response:

```json
{
  "access_token": "eyJhbGc...",
  "expires_in": 3600
}
```
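The refresh endpoint takes the current token as its bearer credential, so a client has to decide when to call it. One common approach, sketched here as an assumption rather than Cloudillo's documented client behavior, is to refresh once the remaining lifetime drops below a safety margin and to request a new token if it has already expired. `TokenState`, `token_state`, and the margin are hypothetical.

```rust
#[derive(Debug, PartialEq)]
pub enum TokenState {
    Fresh,      // keep using the token
    RefreshNow, // call POST /api/auth/refresh
    Expired,    // request a new token instead
}

/// `received_at` is when the token arrived, `expires_in` comes from the
/// response, and `margin` is how early to refresh (all in seconds).
pub fn token_state(received_at: i64, expires_in: i64, now: i64, margin: i64) -> TokenState {
    let expires_at = received_at + expires_in;
    if now >= expires_at {
        TokenState::Expired
    } else if now >= expires_at - margin {
        TokenState::RefreshNow
    } else {
        TokenState::Fresh
    }
}
```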

API Reference

POST /api/auth/token

Request an access token.

Request:

```http
POST /api/auth/token
Content-Type: application/json

{
  "resource_id": "f1~abc123...",
  "scope": "read write",
  "duration": 3600
}
```

Response (200 OK):

```json
{
  "access_token": "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9...",
  "token_type": "Bearer",
  "expires_in": 3600,
  "scope": "read write"
}
```

POST /api/auth/refresh

Refresh an expiring access token.

Request:

```http
POST /api/auth/refresh
Authorization: Bearer <current_token>
```

Response (200 OK):

```json
{
  "access_token": "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9...",
  "expires_in": 3600
}
```

POST /api/auth/proxy

Request an access token on behalf of a user (cross-instance).

Request:

```http
POST /api/auth/proxy
Content-Type: application/json
Authorization: Bearer <proxy_token>

{
  "user_id_tag": "alice.example.com",
  "resource_id": "f1~xyz789...",
  "scope": "read"
}
```

Response (200 OK):

```json
{
  "access_token": "eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9...",
  "expires_in": 3600
}
```

Security Considerations

Token Storage

Client-Side:

- Store in memory or sessionStorage (not localStorage for better security)

- Never log tokens

- Clear on logout

Server-Side:

- JWT signing secrets in AuthAdapter (never exposed)

- Rotate signing secrets periodically

- Use strong secrets (256+ bits)

Token Transmission

- Always use HTTPS/TLS

- Include it in the `Authorization: Bearer <token>` header

- Never in URL query parameters

Token Revocation

Since JWTs are stateless, revocation requires:

- Short Lifetimes: Limit damage window (1-24 hours)

- Blacklisting: Maintain revoked token list (for critical cases)

- Key Rotation: Invalidates all tokens signed with old key
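Blacklisting only needs to remember a revoked token until its own `exp` passes; after that the expiration check rejects it anyway. A minimal in-memory sketch, assuming each token carries a unique identifier (for example a `jti` claim, which is not among the claims listed above); `RevocationList` and its methods are hypothetical.

```rust
use std::collections::HashMap;

/// Revoked token IDs mapped to the token's own expiration time.
#[derive(Default)]
pub struct RevocationList {
    revoked: HashMap<String, i64>,
}

impl RevocationList {
    pub fn revoke(&mut self, token_id: &str, exp: i64) {
        self.revoked.insert(token_id.to_string(), exp);
    }

    pub fn is_revoked(&self, token_id: &str) -> bool {
        self.revoked.contains_key(token_id)
    }

    /// Drop entries for tokens that have expired; the exp check rejects them anyway.
    pub fn prune(&mut self, now: i64) {
        self.revoked.retain(|_, exp| *exp > now);
    }

    pub fn len(&self) -> usize {
        self.revoked.len()
    }
}
```

Short token lifetimes keep this list small, which is one reason the two measures work well together.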

Rate Limiting

Protect token endpoints from abuse:

Token Endpoint Rate Limits:

See Also

ABAC Permission System

Cloudillo uses Attribute-Based Access Control (ABAC) to provide flexible, fine-grained permissions across all resources. This system moves beyond simple role-based access control to enable sophisticated permission rules based on attributes of users, resources, and context.

What is ABAC?

Attribute-Based Access Control determines access by evaluating attributes rather than fixed roles. Instead of asking “Does this user have the admin role?”, ABAC asks “Does this user’s attributes match the policy rules for this resource?”

Benefits Over RBAC

Role-Based Access Control (RBAC):

if user.role == "admin" → allow

if user.role == "editor" → allow read/write

if user.role == "viewer" → allow read

Fixed roles, limited flexibility

Attribute-Based Access Control (ABAC):

if user.connected_to(resource.owner) AND resource.visibility == "connected" → allow

if resource.visibility == "public" → allow

if current_time < resource.expires_at → allow

Flexible rules based on any attributes

Key Advantages

✅ Fine-Grained Control: Permission rules can use any attribute

✅ Context-Aware: Decisions based on time, location, relationship status

✅ Scalable: No need to create roles for every permission combination

✅ Decentralized: Resource owners define their own permission rules

✅ Expressive: Complex boolean logic with AND/OR operators

The Four-Object Model

ABAC decisions involve four types of objects:

1. Subject (Who)

The user or entity requesting access.

```rust
pub struct AuthCtx {
    pub tn_id: TnId,        // Tenant ID (database key)
    pub id_tag: String,     // Identity (e.g., "alice.example.com")
    pub roles: Vec<String>, // Roles (e.g., ["user", "moderator"])
}
```

Attributes Available:

- `tn_id`: Internal tenant identifier
- `id_tag`: Public identity tag
- `roles`: Assigned roles
- Relationship to resource owner (computed at runtime)

Example:

Subject:

id_tag: "bob.example.com"

roles: ["user"]

2. Action (What)

The operation being attempted.

Format: resource:operation

Common Actions:

file:read → Read a file

file:write → Modify a file

file:delete → Delete a file

action:create → Create an action token

action:delete → Delete an action token

profile:update → Update a profile

profile:admin → Administrative access to profile


3. Object (Resource)

The resource being accessed. Must implement AttrSet trait to provide queryable attributes.

```rust
pub trait AttrSet {
    fn get_attr(&self, key: &str) -> Option<Value>;
}
```

Example - File Resource:

```rust
struct FileMetadata {
    file_id: String,
    owner: String,          // "alice.example.com"
    visibility: Visibility, // public, private, followers, etc.
    created_at: i64,
    size: u64,
    shared_with: Vec<String>,
}
```

Implementing AttrSet:

```rust
impl AttrSet for FileMetadata {
    fn get_attr(&self, key: &str) -> Option<Value> {
        match key {
            "owner" => Some(Value::String(self.owner.clone())),
            // Assumes Visibility implements Display
            "visibility" => Some(Value::String(self.visibility.to_string())),
            "created_at" => Some(Value::Number(self.created_at)),
            "size" => Some(Value::Number(self.size as i64)),
            _ => None,
        }
    }
}
```

Attributes Available (depends on resource type):

- `owner`: Resource owner’s identity
- `visibility`: Visibility level
- `created_at`: Creation timestamp
- `audience`: Intended audience (for actions)
- `shared_with`: List of identities with explicit access
- Any custom attributes defined by the resource

4. Environment (Context)

Contextual factors like time, location, or system state.

```rust
pub struct Environment {
    pub current_time: i64,              // Unix timestamp
    pub request_origin: Option<String>, // Future: IP, location
    pub system_load: Option<f32>,       // Future: rate limiting context
}
```

Attributes Available:

- `current_time`: Current Unix timestamp
- Future: IP address, geographic location, system load

Example:

Environment:

current_time: 1738483200

request_origin: null

system_load: null

Visibility Levels

Cloudillo defines six visibility levels that determine who can access a resource. Each level is stored as a single character in the database (NULL for Direct).

Hierarchy (Most to Least Permissive)

| Code | Level | Description |
|------|-------|-------------|
| P | Public | Anyone, including unauthenticated users |
| V | Verified | Any authenticated user from any federated instance |
| 2 | SecondDegree | Friend of friend (reserved for voucher token system) |
| F | Follower | Authenticated users who follow the owner |
| C | Connected | Authenticated users with mutual connection |
| NULL | Direct | Only owner + explicit audience |

1. P - Public

Everyone can access the resource, even unauthenticated users.

Use Cases:

- Public blog posts

- Open documentation

- Community announcements

- Shared files with public links

Permission Logic:

if resource.visibility == 'P':

return ALLOW

Example:

FileMetadata:

owner: "alice.example.com"

visibility: 'P'

# Anyone can read this file

2. V - Verified

Authenticated users from any federated instance can access the resource.

Use Cases:

- Semi-public posts requiring login

- Community content for logged-in users

- Content protected from anonymous scraping

Permission Logic:

if resource.visibility == 'V':

if subject.is_authenticated:

return ALLOW

else:

return DENY

Example:

ActionToken:

owner: "alice.example.com"

visibility: 'V'

type: "POST"

content: "Only for logged-in users"

# Accessible to any authenticated user

3. 2 - SecondDegree (Reserved)

Friend of friend access - authenticated users who are connected to someone who is connected to the owner.

Info

This level is reserved for future implementation via a voucher token system.

Use Cases:

- Extended network visibility

- Gradual trust expansion

- Community discovery

4. F - Follower

Followers of the owner can access the resource (plus the owner).

Use Cases:

- Social media posts visible to followers

- Updates shared with audience

- Blog posts for subscribers

Permission Logic:

if resource.owner == subject.id_tag:

return ALLOW

if subject.follows(resource.owner):

return ALLOW

else:

return DENY

Checking Following Status:

is_following = meta_adapter.has_action(subject.tn_id, "FLLW", resource.owner)

# Checks if subject has a FLLW action token for the resource owner

Example:

ActionToken:

owner: "alice.example.com"

visibility: 'F'

type: "POST"

content: "Hello followers!"

# Accessible to anyone who follows alice

5. C - Connected

Connected users can access the resource (mutually connected users + owner).

A connection exists when both parties have issued CONN action tokens to each other.

Use Cases:

- Private conversations

- Shared documents between colleagues

- Connection-only updates

- Close friends content

Permission Logic:

if resource.owner == subject.id_tag:

return ALLOW

if bidirectional_connection(subject.id_tag, resource.owner):

return ALLOW

else:

return DENY

Checking Connection Status:

# Check if both users have CONN tokens for each other

alice_to_bob = meta_adapter.has_action(alice_tn_id, "CONN", "bob.example.com")

bob_to_alice = meta_adapter.has_action(bob_tn_id, "CONN", "alice.example.com")

connected = alice_to_bob AND bob_to_alice
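Both relationship checks reduce to lookups of stored action tokens. This sketch uses a hypothetical in-memory `ActionIndex` as a stand-in for `meta_adapter.has_action`; it is keyed by issuer id_tag rather than tn_id (an assumption) so that both directions of a connection can be checked in one store.

```rust
use std::collections::HashSet;

/// Stored action tokens as (issuer, type, target) triples.
#[derive(Default)]
pub struct ActionIndex {
    actions: HashSet<(String, String, String)>,
}

impl ActionIndex {
    pub fn add(&mut self, issuer: &str, kind: &str, target: &str) {
        self.actions
            .insert((issuer.to_string(), kind.to_string(), target.to_string()));
    }

    /// Stand-in for `meta_adapter.has_action(...)`.
    pub fn has_action(&self, issuer: &str, kind: &str, target: &str) -> bool {
        self.actions
            .contains(&(issuer.to_string(), kind.to_string(), target.to_string()))
    }

    /// Follower: a one-way FLLW token from subject to owner.
    pub fn follows(&self, subject: &str, owner: &str) -> bool {
        self.has_action(subject, "FLLW", owner)
    }

    /// Connected: CONN tokens must exist in both directions.
    pub fn connected(&self, a: &str, b: &str) -> bool {
        self.has_action(a, "CONN", b) && self.has_action(b, "CONN", a)
    }
}
```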

Example:

FileMetadata:

owner: "alice.example.com"

visibility: 'C'

file_name: "project-proposal.pdf"

# Only users connected to alice can access

6. NULL - Direct (Audience-Based)

Most restrictive - only the owner and users explicitly listed in the audience field can access.

Use Cases:

- Direct messages to specific users

- Files shared with specific people

- Invitations to specific identities

- Private resources

Permission Logic:

if resource.owner == subject.id_tag:

return ALLOW

if resource.audience.contains(subject.id_tag):

return ALLOW

else:

return DENY

Example:

ActionToken:

owner: "alice.example.com"

type: "MSG"

content: "Hi Bob!"

audience: ["bob.example.com"]

visibility: NULL

# Only alice and bob can see this

Subject Access Levels

While Visibility Levels (P/V/2/F/C/NULL) define who can see a resource, Subject Access Levels are computed attributes that describe the relationship between a requesting user and a resource owner. These levels are used during permission evaluation.

Access Level Hierarchy

| Level | Code | Description |
|-------|------|-------------|
| Owner | 6 | The user owns the resource |
| Connected | 5 | Mutual connection with owner (bidirectional CONN) |
| Follower | 4 | Follows the owner (unidirectional FLLW) |
| SecondDegree | 3 | Friend of a friend (reserved for future voucher system) |
| Verified | 2 | Authenticated user from any instance |
| Public | 1 | Unauthenticated or anonymous user |
| None | 0 | No access (blocked or unknown) |

How Access Levels Are Computed

compute_access_level(subject, resource_owner):

# Check if subject is the owner

if subject.id_tag == resource_owner:

return AccessLevel::Owner

# Check for mutual connection

if has_bidirectional_connection(subject.id_tag, resource_owner):

return AccessLevel::Connected

# Check if subject follows owner

if subject_follows(subject.id_tag, resource_owner):

return AccessLevel::Follower

# Check second-degree connection (future)

if has_second_degree_connection(subject.id_tag, resource_owner):

return AccessLevel::SecondDegree

# Check if authenticated

if subject.is_authenticated:

return AccessLevel::Verified

# Unauthenticated user

return AccessLevel::Public

Access Level vs Visibility

The permission check compares the computed access level against the required visibility:

check_access(subject, resource):

access_level = compute_access_level(subject, resource.owner)

required_level = visibility_to_access_level(resource.visibility)

return access_level >= required_level

Visibility to Access Level mapping:

| Visibility | Required Access Level |
|------------|-----------------------|
| P (Public) | Public (1) |
| V (Verified) | Verified (2) |
| 2 (SecondDegree) | SecondDegree (3) |
| F (Follower) | Follower (4) |
| C (Connected) | Connected (5) |
| NULL (Direct) | Owner or explicit audience |
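Because access levels are totally ordered, the comparison above maps directly onto a Rust enum that derives `Ord`. In this sketch the relationship facts are passed in as booleans (computed elsewhere, for example from FLLW/CONN lookups), visibility is an `Option<char>` where `None` stands for NULL, and the `in_audience` parameter is an assumption; the function names follow the pseudocode but their signatures are hypothetical.

```rust
/// Declaration order matches the numeric codes, so `>=` means "at least as privileged".
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
pub enum AccessLevel {
    None = 0,
    Public = 1,
    Verified = 2,
    SecondDegree = 3,
    Follower = 4,
    Connected = 5,
    Owner = 6,
}

pub fn compute_access_level(
    is_owner: bool,
    connected: bool,
    follows: bool,
    second_degree: bool,
    authenticated: bool,
) -> AccessLevel {
    if is_owner {
        AccessLevel::Owner
    } else if connected {
        AccessLevel::Connected
    } else if follows {
        AccessLevel::Follower
    } else if second_degree {
        AccessLevel::SecondDegree
    } else if authenticated {
        AccessLevel::Verified
    } else {
        AccessLevel::Public
    }
}

pub fn visibility_to_access_level(code: char) -> Option<AccessLevel> {
    match code {
        'P' => Some(AccessLevel::Public),
        'V' => Some(AccessLevel::Verified),
        '2' => Some(AccessLevel::SecondDegree),
        'F' => Some(AccessLevel::Follower),
        'C' => Some(AccessLevel::Connected),
        _ => None, // unknown code
    }
}

pub fn check_access(level: AccessLevel, visibility: Option<char>, in_audience: bool) -> bool {
    match level {
        AccessLevel::None => return false, // blocked
        AccessLevel::Owner => return true,
        _ => {}
    }
    match visibility {
        // NULL (Direct): only the owner or an explicit audience member
        None => in_audience,
        Some(code) => match visibility_to_access_level(code) {
            Some(required) => level >= required,
            None => false, // unknown codes are denied
        },
    }
}
```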

Attribute Set Implementations

Cloudillo implements the AttrSet trait for different resource types, providing consistent attribute access for permission evaluation.

ProfileAttrs

Attributes for user profile resources:

```rust
struct ProfileAttrs {
    id_tag: String,     // "alice.example.com"
    owner: String,      // Same as id_tag for profiles
    visibility: char,   // Profile visibility setting
    created_at: i64,    // Profile creation timestamp
    verified: bool,     // Is identity verified?
    roles: Vec<String>, // Assigned roles
}

impl AttrSet for ProfileAttrs {
    fn get_attr(&self, key: &str) -> Option<Value> {
        // Owned fields are cloned because get_attr only borrows self
        match key {
            "id_tag" | "owner" => Some(Value::String(self.id_tag.clone())),
            "visibility" => Some(Value::Char(self.visibility)),
            "created_at" => Some(Value::Number(self.created_at)),
            "verified" => Some(Value::Bool(self.verified)),
            "roles" => Some(Value::Array(self.roles.clone())),
            _ => None,
        }
    }
}
```

ActionAttrs

Attributes for action token resources:

```rust
struct ActionAttrs {
    action_id: String,         // "a1~xyz789..."
    owner: String,             // Issuer identity
    action_type: String,       // "POST", "CMNT", "REACT", etc.
    audience: Option<String>,  // Intended recipient
    visibility: Option<char>,  // P/V/2/F/C, or None for NULL (Direct)
    parent_id: Option<String>, // Parent action (for threading)
    created_at: i64,           // iat claim timestamp
    status: char,              // A/C/N/D
}

impl AttrSet for ActionAttrs {
    fn get_attr(&self, key: &str) -> Option<Value> {
        match key {
            "id" => Some(Value::String(self.action_id.clone())),
            "owner" | "issuer" => Some(Value::String(self.owner.clone())),
            "type" => Some(Value::String(self.action_type.clone())),
            "audience" => self.audience.clone().map(Value::String),
            "visibility" => self.visibility.map(Value::Char),
            "parent" => self.parent_id.clone().map(Value::String),
            "created_at" => Some(Value::Number(self.created_at)),
            "status" => Some(Value::Char(self.status)),
            _ => None,
        }
    }
}
```

FileAttrs

Attributes for file/blob resources:

```rust
struct FileAttrs {
    file_id: String,          // "f1~abc123..."
    owner: String,            // File owner identity
    visibility: Option<char>, // P/V/F/C, or None for NULL (Direct)
    mime_type: String,        // "image/jpeg", "application/pdf"
    size: u64,                // File size in bytes
    created_at: i64,          // Upload timestamp
    shared_with: Vec<String>, // Explicit share list
}

impl AttrSet for FileAttrs {
    fn get_attr(&self, key: &str) -> Option<Value> {
        match key {
            "id" => Some(Value::String(self.file_id.clone())),
            "owner" => Some(Value::String(self.owner.clone())),
            "visibility" => self.visibility.map(Value::Char),
            "mime_type" => Some(Value::String(self.mime_type.clone())),
            "size" => Some(Value::Number(self.size as i64)),
            "created_at" => Some(Value::Number(self.created_at)),
            "shared_with" => Some(Value::Array(self.shared_with.clone())),
            _ => None,
        }
    }
}
```

SubjectAttrs

Attributes for the requesting subject (user context):

```rust
struct SubjectAttrs {
    id_tag: String,            // "bob.example.com"
    tn_id: TnId,               // Internal tenant ID
    is_authenticated: bool,
    roles: Vec<String>,        // ["user", "moderator"]
    access_level: AccessLevel, // Computed for current resource
}

impl AttrSet for SubjectAttrs {
    fn get_attr(&self, key: &str) -> Option<Value> {
        match key {
            "id_tag" => Some(Value::String(self.id_tag.clone())),
            "authenticated" => Some(Value::Bool(self.is_authenticated)),
            "roles" => Some(Value::Array(self.roles.clone())),
            // The cast requires AccessLevel to be a fieldless, Copy enum
            "access_level" => Some(Value::Number(self.access_level as i64)),
            _ => None,
        }
    }
}
```

Using Attribute Sets in Policies

# Policy rule using attribute sets

Rule:

Condition:

(object.visibility == 'F') AND

(subject.access_level >= 4) # Follower or higher

Effect: ALLOW

# Another rule combining multiple attributes

Rule:

Condition:

(object.type == 'POST') AND

(object.owner == subject.id_tag OR subject.HasRole('moderator'))

Effect: ALLOW_DELETE

Policy Structure

ABAC uses two-level policies to define permission boundaries:

TOP Policy (Constraints)

Defines maximum permissions - what is never allowed.

Example:

TopPolicy:

Rule 1:

Condition: visibility == "public" AND size > 100MB

Effect: DENY

# Files larger than 100MB cannot be shared publicly

Rule 2:

Condition: created_at < (current_time - 86400)

Effect: DENY_WRITE

# Action tokens cannot be modified after 24 hours

BOTTOM Policy (Guarantees)

Defines minimum permissions - what is always allowed.

Example:

BottomPolicy:

Rule 1:

Condition: subject.id_tag == resource.owner

Effect: ALLOW

# Owner can always access their own resources

Rule 2:

Condition: visibility == "public" AND action == "read"

Effect: ALLOW

# Public resources are always readable

Default Rules

Between TOP and BOTTOM policies, default rules apply based on visibility and ownership:

default_permission_check(subject, action, object):

1. Check ownership:

if object.owner == subject.id_tag

return ALLOW

2. Check visibility:

switch object.visibility:

case 'P': # Public

if action ends with ":read"

return ALLOW

case 'V': # Verified

if subject.is_authenticated

return ALLOW

return DENY

case '2': # SecondDegree

return check_second_degree(subject, object)

case 'F': # Follower

return check_following(subject, object)

case 'C': # Connected

return check_connection(subject, object)

case NULL: # Direct

return check_audience(subject, object)

3. Default deny

return DENY

Policy Operators

ABAC supports various operators for building permission rules:

Comparison Operators

Equals

visibility == "public"

subject.id_tag == resource.owner

NotEquals

GreaterThan / LessThan

size > 1000000

created_at < current_time

GreaterThanOrEqual / LessThanOrEqual

Set Operators

Contains

"public" IN tags

subject.id_tag IN shared_with

NotContains

subject.id_tag NOT IN blocked_users

In

subject.role IN ["admin", "moderator"]

Role Operator

HasRole

subject.HasRole("admin")

subject.HasRole("moderator")

Logical Operators

And

(published == true) AND (visibility == "public")

Or

(subject.id_tag == resource.owner) OR (subject.HasRole("admin"))
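These operators compose into an expression tree. The following sketch assumes a simplified attribute map and a self-contained `Value` type (not Cloudillo's own); `Cond`, `Ctx`, and `eval` are hypothetical names. NotEquals can be written as `Not(Equals(...))`, and `In` over roles as an `Or` of `HasRole` conditions.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, PartialEq)]
pub enum Value {
    Str(String),
    Num(i64),
    List(Vec<String>),
}

/// Hypothetical condition tree mirroring the operators above.
pub enum Cond {
    Equals(&'static str, Value),
    GreaterThan(&'static str, i64),
    LessThan(&'static str, i64),
    Contains(&'static str, String), // list attribute contains a value
    HasRole(String),
    And(Vec<Cond>),
    Or(Vec<Cond>),
    Not(Box<Cond>),
}

/// Attributes of the object and subject, flattened into one map.
pub struct Ctx {
    pub attrs: HashMap<&'static str, Value>,
    pub roles: Vec<String>,
}

impl Cond {
    pub fn eval(&self, ctx: &Ctx) -> bool {
        match self {
            Cond::Equals(k, v) => ctx.attrs.get(k) == Some(v),
            // A missing or non-numeric attribute never satisfies a comparison
            Cond::GreaterThan(k, n) => matches!(ctx.attrs.get(k), Some(Value::Num(x)) if x > n),
            Cond::LessThan(k, n) => matches!(ctx.attrs.get(k), Some(Value::Num(x)) if x < n),
            Cond::Contains(k, item) => {
                matches!(ctx.attrs.get(k), Some(Value::List(xs)) if xs.contains(item))
            }
            Cond::HasRole(r) => ctx.roles.contains(r),
            // `all` and `any` short-circuit, so earlier conditions can skip later ones
            Cond::And(cs) => cs.iter().all(|c| c.eval(ctx)),
            Cond::Or(cs) => cs.iter().any(|c| c.eval(ctx)),
            Cond::Not(c) => !c.eval(ctx),
        }
    }
}
```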

Permission Evaluation Flow

When a permission check is requested:

```text
1. Load Subject (user context from JWT)
   ↓
2. Load Object (resource with attributes)
   ↓
3. Load Environment (current time, etc.)
   ↓
4. Check TOP Policy (maximum permissions)
   ├─ If denied → return Deny
   └─ If allowed → continue
   ↓
5. Check BOTTOM Policy (minimum permissions)
   ├─ If allowed → return Allow
   └─ If not matched → continue
   ↓
6. Check Default Rules
   ├─ Ownership check
   ├─ Visibility check
   └─ Relationship checks
   ↓
7. Return Decision (Allow or Deny)
```
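Steps 4 to 7 reduce to three stages checked in order. A minimal sketch, assuming rules are plain functions that return `Some(decision)` when they match; `Request`, `Rule`, `evaluate`, and the example rules `deny_expired` and `allow_owner` are hypothetical, and `default_rules` is deliberately reduced to the public-read case instead of the full visibility logic.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Decision {
    Allow,
    Deny,
}

/// Simplified request: subject, action, and a few object/environment attributes.
pub struct Request {
    pub subject: String,
    pub action: String, // e.g. "file:read"
    pub owner: String,
    pub visibility: Option<char>,
    pub expires_at: Option<i64>,
    pub now: i64,
}

/// A rule yields Some(decision) when its condition matches, None otherwise.
pub type Rule = fn(&Request) -> Option<Decision>;

/// TOP example: expired documents cannot be read.
pub fn deny_expired(r: &Request) -> Option<Decision> {
    match r.expires_at {
        Some(t) if t < r.now && r.action.ends_with(":read") => Some(Decision::Deny),
        _ => None,
    }
}

/// BOTTOM example: owners can always access their own resources.
pub fn allow_owner(r: &Request) -> Option<Decision> {
    (r.subject == r.owner).then_some(Decision::Allow)
}

/// Reduced default rules: only public reads are allowed here.
pub fn default_rules(r: &Request) -> Decision {
    if r.visibility == Some('P') && r.action.ends_with(":read") {
        Decision::Allow
    } else {
        Decision::Deny
    }
}

pub fn evaluate(r: &Request, top: &[Rule], bottom: &[Rule]) -> Decision {
    // Step 4: a matching DENY in the TOP policy is final.
    if top.iter().any(|rule| rule(r) == Some(Decision::Deny)) {
        return Decision::Deny;
    }
    // Step 5: a matching ALLOW in the BOTTOM policy is final.
    if bottom.iter().any(|rule| rule(r) == Some(Decision::Allow)) {
        return Decision::Allow;
    }
    // Step 6: fall back to the default rules.
    default_rules(r)
}
```

Because TOP is checked first, its constraints apply even to owners; in this sketch an expired document stays unreadable for its owner too.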

Evaluation Example

Request: Bob wants to read Alice’s file

Subject:

id_tag: "bob.example.com"

roles: ["user"]

Action: "file:read"

Object:

owner: "alice.example.com"

visibility: "connected"

file_id: "f1~abc123"

Environment:

current_time: 1738483200

Evaluation:

1. TOP Policy: No blocking rules → continue

2. BOTTOM Policy: Not owner → continue

3. Default Rules:

a. Is owner? No (alice ≠ bob)

b. Visibility = "connected"

c. Check connection:

- Alice has CONN to Bob? Yes

- Bob has CONN to Alice? Yes

- Result: Connected!

d. Action is "read"? Yes

→ ALLOW

Integration with Routes

Cloudillo uses permission middleware to enforce ABAC on HTTP routes:

Permission Middleware Layers

Routes are configured with permission middleware to enforce access control:

Protected Routes:

```text
# Actions
GET    /api/action              + check_perm_action("read")
POST   /api/action              + check_perm_action("create")
DELETE /api/action/:id          + check_perm_action("delete")

# Files
GET    /api/file/:id            + check_perm_file("read")
PATCH  /api/file/:id            + check_perm_file("write")
DELETE /api/file/:id            + check_perm_file("delete")

# Profiles
PATCH  /api/profile/:id         + check_perm_profile("update")
PATCH  /api/admin/profile/:id   + check_perm_profile("admin")
```

Each middleware checks permissions before the handler executes.

Middleware Implementation

The permission middleware follows this flow:

check_perm_action(action):

1. Extract auth context from request

2. Extract resource ID from request path

3. Load resource from storage adapter

4. Call abac::check_permission(auth, "action:{action}", resource, environment)

5. If allowed: proceed to next middleware/handler

6. If denied: return Error::PermissionDenied

This middleware is applied to each route requiring permission checks (see Permission Middleware Layers above).

Examples

Example 1: Public File Access

Alice creates a public file:

POST /api/file/image/logo.png

Authorization: Bearer <alice_token>

Body: <image data>

Response:

file_id: "f1~abc123"

visibility: "public"

Bob reads the file (no connection needed):

GET /api/file/f1~abc123

Permission Check:

Subject: bob.example.com

Action: file:read

Object: { owner: alice, visibility: public }

Decision: ALLOW (public resources readable by anyone)

Example 2: Connected-Only File

Alice creates a connected-only file:

POST /api/file/document/private-notes.pdf

Authorization: Bearer <alice_token>

Body: { visibility: "connected" }

Response:

file_id: "f1~xyz789"

visibility: "connected"

Bob tries to read (not connected):

GET /api/file/f1~xyz789

Authorization: Bearer <bob_token>

Permission Check:

Subject: bob

Action: file:read

Object: { owner: alice, visibility: connected }

Connection: alice ↔ bob? NO

Decision: DENY

Charlie tries to read (connected):

GET /api/file/f1~xyz789

Authorization: Bearer <charlie_token>

Permission Check:

Subject: charlie

Action: file:read

Object: { owner: alice, visibility: connected }

Connection: alice ↔ charlie? YES

Decision: ALLOW

Example 3: Role-Based Access

Admin deletes any profile:

DELETE /api/admin/profile/bob.example.com

Authorization: Bearer <admin_token>

Permission Check:

Subject: admin (roles: [admin])

Action: profile:admin

Object: { owner: bob }

HasRole("admin")? YES

Decision: ALLOW

Regular user tries same action:

DELETE /api/admin/profile/bob.example.com

Authorization: Bearer <alice_token>

Permission Check:

Subject: alice (roles: [user])

Action: profile:admin

HasRole("admin")? NO

Decision: DENY

Example 4: Time-Based Access

Define policy: documents expire after 30 days

TopPolicy:

Rule:

Condition: (expires_at < current_time) AND (action == "read")

Effect: DENY

Bob tries to read expired document:

GET /api/file/f1~old123

Permission Check:

Subject: bob

Action: file:read

Object: {

owner: alice

visibility: public

expires_at: 1738400000 (in the past)

}

Environment: { current_time: 1738483200 }

TOP Policy check:

1738400000 < 1738483200? YES

Decision: DENY (expired)

Implementing Custom Policies

Adding a Custom Policy

Define a custom policy for team files:

create_team_policy():

top_rules:

Rule 1:

Condition: (type == "team") AND (visibility == "public")

Effect: DENY

Description: "Team files must not be public"

bottom_rules:

Rule 1:

Condition: (type == "team") AND (subject.id_tag IN members) AND (action == "read")

Effect: ALLOW

Description: "Team members can read team files"

Apply in handler:

get_team_file(auth, file_id):

file = meta_adapter.read_file(file_id)

allowed = abac::check_permission_with_policy(

auth,

"file:read",

file,

Environment::current(),

create_team_policy()

)

if NOT allowed:

return Error::PermissionDenied

return serve_file(file)

Defining Custom Attributes

struct TeamFile {

file_id: String

owner: String

team_id: String

members: List[String]

file_type: String

}

Implementing AttrSet trait:

TeamFile.get_attr(key):

switch key:

case "owner":

return Value::String(self.owner)

case "team_id":

return Value::String(self.team_id)

case "members":

return Value::Array(self.members)

case "type":

return Value::String(self.file_type)

default:

return None
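In Rust, the same idea maps onto a trait with a single lookup method. The exact `AttrSet` signature and `Value` variants in Cloudillo may differ. This is a self-contained sketch with a hypothetical `Value` enum.

```rust
/// Attribute values a policy can compare against (illustrative subset).
#[derive(Debug, Clone, PartialEq)]
pub enum Value {
    String(String),
    Array(Vec<String>),
}

/// Lets the policy engine read attributes by name without knowing the concrete type.
pub trait AttrSet {
    fn get_attr(&self, key: &str) -> Option<Value>;
}

pub struct TeamFile {
    pub file_id: String,
    pub owner: String,
    pub team_id: String,
    pub members: Vec<String>,
    pub file_type: String,
}

impl AttrSet for TeamFile {
    fn get_attr(&self, key: &str) -> Option<Value> {
        match key {
            "owner" => Some(Value::String(self.owner.clone())),
            "team_id" => Some(Value::String(self.team_id.clone())),
            "members" => Some(Value::Array(self.members.clone())),
            // Exposed as "type" so policies can write `type == "team"`.
            "type" => Some(Value::String(self.file_type.clone())),
            // Unknown attributes yield None, so rules that need them cannot match.
            _ => None,
        }
    }
}
```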

Caching Relationship Checks

Following/connection checks require looking up FLLW/CONN action tokens in the database, which can be expensive. Cache the results to avoid repeated queries:

RelationshipCache:

ttl: Duration (cache validity)

cache: HashMap[(user_a, user_b) → (bool, timestamp)]

check_connection(user_a, user_b):

1. Check cache for (user_a, user_b)

if found AND cached_at.elapsed() < ttl

return cached_result

2. Query database

connected = check_connection_db(user_a, user_b)

3. Cache result

cache[(user_a, user_b)] = (connected, now)

4. Return result
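The cache can be sketched in Rust as follows. Connections require CONN tokens on both sides, so the pair is treated as symmetric and normalized before lookup. Follow relationships are directional and should not be normalized this way. The database query is passed in as a closure. `RelationshipCache` and its methods are illustrative, not Cloudillo's API.

```rust
use std::collections::HashMap;
use std::time::{Duration, Instant};

pub struct RelationshipCache {
    ttl: Duration,
    // Normalized (smaller, larger) pair -> (connected, time cached)
    cache: HashMap<(String, String), (bool, Instant)>,
}

impl RelationshipCache {
    pub fn new(ttl: Duration) -> Self {
        Self { ttl, cache: HashMap::new() }
    }

    /// Order the pair so (a, b) and (b, a) share one cache entry.
    fn key(a: &str, b: &str) -> (String, String) {
        if a <= b {
            (a.to_string(), b.to_string())
        } else {
            (b.to_string(), a.to_string())
        }
    }

    pub fn check_connection(
        &mut self,
        a: &str,
        b: &str,
        query_db: impl FnOnce(&str, &str) -> bool,
    ) -> bool {
        let key = Self::key(a, b);
        // 1. Serve from cache if the entry is still fresh.
        if let Some(&(connected, cached_at)) = self.cache.get(&key) {
            if cached_at.elapsed() < self.ttl {
                return connected;
            }
        }
        // 2. Cache miss or stale entry: query the database.
        let connected = query_db(a, b);
        // 3. Store the result with the current time.
        self.cache.insert(key, (connected, Instant::now()));
        // 4. Return the result.
        connected
    }

    /// Drop all entries, e.g. after a CONN token is added or revoked.
    pub fn clear(&mut self) {
        self.cache.clear();
    }
}
```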

Optimizing Policy Evaluation

For complex policies, evaluate the cheapest conditions first, so that a cheap condition that fails can skip the expensive ones:

// Bad: Expensive database check first

And(vec![

Attr("members").Contains(subject.id_tag), // DB query

Attr("type").Equals("team"), // Cheap

])

// Good: Cheap check first

And(vec![

Attr("type").Equals("team"), // Cheap, fails fast

Attr("members").Contains(subject.id_tag), // Only if needed

])
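This ordering only pays off if `And` stops at the first false operand. The sketch below uses a hypothetical `Condition` enum in which `And` is built on `Iterator::all`, which short-circuits. The "expensive" check is a plain closure standing in for a database query.

```rust
/// Hypothetical condition tree; `Expensive` stands in for a DB-backed check.
pub enum Condition<'a> {
    Cheap(bool),
    Expensive(&'a dyn Fn() -> bool),
    And(Vec<Condition<'a>>),
}

impl<'a> Condition<'a> {
    pub fn eval(&self) -> bool {
        match self {
            Condition::Cheap(v) => *v,
            Condition::Expensive(f) => f(),
            // `all` stops at the first operand that evaluates to false,
            // so later (expensive) operands are skipped.
            Condition::And(items) => items.iter().all(|c| c.eval()),
        }
    }
}
```

If the cheap condition comes first and fails, the expensive closure is never called. Reversing the order runs the expensive check for every request.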

Permission Check Batching

When checking permissions for multiple resources, batch-fetch relationships to minimize database queries:

check_permissions_batch(auth, action, resources):

1. Extract all resource owners

owners = {r.owner for r in resources}

2. Pre-fetch all relationships

relationships = fetch_relationships_batch(auth.id_tag, owners)

# Fetches all FLLW/CONN tokens in one query

3. Check each resource

results = []

for resource in resources:

permission = check_permission_with_cache(

auth, action, resource, relationships

)

results.append(permission)

4. Return results

This avoids N+1 queries where each permission check would require separate lookups.
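The batched check can be sketched in Rust as follows. The model is simplified: each resource is either open to everyone or restricted to connections. `fetch_connected` is a hypothetical stand-in for `fetch_relationships_batch`. It receives all distinct owners at once and returns those the subject is connected to.

```rust
use std::collections::HashSet;

pub struct Resource {
    pub id: String,
    pub owner: String,
    /// Simplification: true means "visible to connections only".
    pub connected_only: bool,
}

/// Checks read access for many resources with a single relationship lookup.
pub fn check_permissions_batch(
    subject: &str,
    resources: &[Resource],
    fetch_connected: impl FnOnce(&str, &HashSet<&str>) -> HashSet<String>,
) -> Vec<bool> {
    // 1. Collect the distinct owners.
    let owners: HashSet<&str> = resources.iter().map(|r| r.owner.as_str()).collect();
    // 2. Pre-fetch all relationships in one call.
    let connected = fetch_connected(subject, &owners);
    // 3. Decide each resource from the pre-fetched data, with no further queries.
    resources
        .iter()
        .map(|r| r.owner == subject || !r.connected_only || connected.contains(&r.owner))
        .collect()
}
```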

Security Best Practices

1. Default Deny

Always default to denying access unless explicitly allowed:

Good Pattern:

check_permission(...):

check all explicit allow conditions

if any match: return ALLOW

otherwise: return DENY

Bad Pattern:

check_permission(...):

check some conditions

forget to handle unknown cases

accidentally allows access
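One way to make the good pattern structural is to express permissions only as a list of allow rules and grant access if any of them matches. `Iterator::any` returns `false` for an empty list, so a missing or forgotten rule results in a denial, never an accidental allow. `AllowRule` and this `check_permission` signature are hypothetical.

```rust
/// An explicit allow condition over some request context `C`.
pub type AllowRule<C> = fn(&C) -> bool;

/// Grants access only if an explicit allow rule matches.
/// No rules, or no matching rule, means DENY (returns false).
pub fn check_permission<C>(ctx: &C, allow_rules: &[AllowRule<C>]) -> bool {
    allow_rules.iter().any(|rule| rule(ctx))
}
```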

2. Validate on Both Sides

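Check permissions on both the client and the server. The client-side check is only a UX convenience (for example, hiding a button the user cannot use). The server must always enforce the check, because client code can be modified or bypassed: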

Example flow:

Client:

if canDeleteFile(auth, file):

show delete button (UX convenience)

Server:

delete_file(auth, file_id):

file = load_file(file_id)

if NOT check_permission(auth, "file:delete", file):

return Error::PermissionDenied

// Safe to proceed with deletion

3. Audit Permission Denials

Log all permission denials for security monitoring:

if NOT allowed:

log warning:

subject: auth.id_tag

action: action

resource: resource.id

timestamp: current_time

reason: "ABAC permission denial"

return Error::PermissionDenied

Log fields should include subject identity, action attempted, resource ID, and timestamp for audit trails.
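The following self-contained sketch records denials as structured entries before returning the error. Entries go into an in-memory `Vec` here; a real service would send them to its logging backend instead. `AuditEntry`, `deny_with_audit` and the line format are hypothetical.

```rust
use std::time::{SystemTime, UNIX_EPOCH};

#[derive(Debug)]
pub struct AuditEntry {
    pub subject: String,
    pub action: String,
    pub resource: String,
    pub timestamp: u64,
    pub reason: &'static str,
}

impl AuditEntry {
    /// Render as one key=value line, easy to grep or ship to a log pipeline.
    pub fn to_line(&self) -> String {
        format!(
            "WARN permission denied subject={} action={} resource={} ts={} reason=\"{}\"",
            self.subject, self.action, self.resource, self.timestamp, self.reason
        )
    }
}

#[derive(Debug, PartialEq)]
pub enum Error {
    PermissionDenied,
}

/// Records the denial, then returns the error for the handler to propagate.
pub fn deny_with_audit<T>(
    log: &mut Vec<AuditEntry>,
    subject: &str,
    action: &str,
    resource: &str,
) -> Result<T, Error> {
    let timestamp = SystemTime::now()
        .duration_since(UNIX_EPOCH)
        .map(|d| d.as_secs())
        .unwrap_or(0);
    log.push(AuditEntry {
        subject: subject.to_string(),
        action: action.to_string(),
        resource: resource.to_string(),
        timestamp,
        reason: "ABAC permission denial",
    });
    Err(Error::PermissionDenied)
}
```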

4. Test Permission Policies

Write comprehensive tests for all permission scenarios:

test_connected_file_access():

# Setup

alice = create_user("alice")

bob = create_user("bob")

charlie = create_user("charlie")

create_connection(alice, bob) # Alice ↔ Bob

file = create_file(alice, visibility="connected")

# Test cases

assert check_permission(alice, "file:read", file) # Owner → ALLOW

assert check_permission(bob, "file:read", file) # Connected → ALLOW

assert NOT check_permission(charlie, "file:read", file) # Not connected → DENY

Test all visibility levels (P, V, 2, F, C, NULL) and edge cases (expired resources, role-based access, custom attributes).

Troubleshooting

Permission Denied But Should Be Allowed

Debugging steps:

- Check visibility level:

visibility = resource.visibility

# Expected: 'P' | 'V' | '2' | 'F' | 'C' | NULL

# (Public | Verified | SecondDegree | Follower | Connected | Direct)

- Check ownership:

owner = resource.owner

subject_id = subject.id_tag

# If owner == subject_id, should have full access

- Check relationship status:

following = check_following(subject, resource.owner)

connected = check_connection(subject, resource.owner)

# Verify FLLW and/or CONN tokens exist if needed

- Check action format:

Wrong: Action("read") # Missing resource type

Correct: Action("file:read") # Uses the resource:operation format

- Enable debug logging:

RUST_LOG=cloudillo::core::abac=debug cargo run

# Shows decision flow and matched rules
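A small validator can catch the malformed `Action("read")` case before it reaches the policy engine. The split rule used here is an assumption based on the actions in this document (`file:read`, `file:delete`, `profile:admin`): exactly one colon, with both parts non-empty. `parse_action` is a hypothetical helper.

```rust
/// Splits "file:read" into ("file", "read"); rejects strings missing a resource type.
pub fn parse_action(action: &str) -> Result<(&str, &str), String> {
    match action.split_once(':') {
        Some((resource, op)) if !resource.is_empty() && !op.is_empty() && !op.contains(':') => {
            Ok((resource, op))
        }
        _ => Err(format!(
            "invalid action {:?}: expected \"<resource>:<operation>\"",
            action
        )),
    }
}
```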

Relationship Checks Not Working

Common issues:

- Missing action tokens: Ensure FLLW/CONN tokens exist

Check for FLLW token: meta_adapter.read_action(tn_id, "FLLW", target)

Check for CONN token: meta_adapter.read_action(tn_id, "CONN", target)

# If not found, relationship check will return false

- Unidirectional connection: Both sides need CONN tokens

Alice → Bob: CONN token exists

Bob → Alice: CONN token MISSING

Result: NOT connected (requires bidirectional tokens)

- Cache staleness: Clear relationship cache if stale

relationship_cache.clear()

# Cache holds results for TTL duration; manually clear if needed
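The bidirectional rule can be sketched as two lookups that must both succeed. Action tokens are modeled here as a set of `(type, issuer, target)` triples. In Cloudillo they would come from `meta_adapter.read_action`, whose return type is not shown in this guide, so `ActionStore` is an illustrative stand-in. Following is assumed to need only the follower's FLLW token.

```rust
use std::collections::HashSet;

/// Issued action tokens, keyed by (action type, issuer, target).
#[derive(Default)]
pub struct ActionStore {
    tokens: HashSet<(String, String, String)>,
}

impl ActionStore {
    pub fn new() -> Self {
        Self::default()
    }

    pub fn add(&mut self, kind: &str, issuer: &str, target: &str) {
        self.tokens
            .insert((kind.to_string(), issuer.to_string(), target.to_string()));
    }

    fn has(&self, kind: &str, issuer: &str, target: &str) -> bool {
        self.tokens
            .contains(&(kind.to_string(), issuer.to_string(), target.to_string()))
    }

    /// Following is one-directional: only the follower's FLLW token is needed.
    pub fn is_following(&self, follower: &str, target: &str) -> bool {
        self.has("FLLW", follower, target)
    }

    /// Connected requires CONN tokens in both directions.
    pub fn is_connected(&self, a: &str, b: &str) -> bool {
        self.has("CONN", a, b) && self.has("CONN", b, a)
    }
}
```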

See Also